Data used to construct an IPPOTrainer instance.

#include <PPOTrainer.h>

Public Attributes

| Type | Name | Default | Description |
| --- | --- | --- | --- |
| IGraph * | graph | nullptr | The graph we are training. This should contain the policy network and value network. |
| ICuriosityModule * | curiosityModule | nullptr | Optional curiosity module for additional exploration rewards. |
| const char * | valueNodeName | "" | The name of the output of the critic node. This node should be a linear layer with one output neuron and no activation function. |
| float | valueCoefficient | 1.0f | How much the value contributes to the loss. |
| float | entropyCoefficient | 0.01f | How much entropy contributes to the loss. Entropy is a measure of how random our output is. At the beginning of training, we want random output, but towards the end we want less random output so that we choose paths we know work. |
| float | policyClipEpsilon | 0.2f | Range (as a fraction, so 0.2 means 20%) within which the policy is allowed to change in a single step. |
| float | gaeLambda | 0.95f | The lambda parameter of generalized advantage estimation (GAE). It acts as a multiplier on top of gamma when discounting future temporal-difference errors in the advantage estimate. |
| int | trajectorySize | 2048 | How many rows of data we should wait for before training. |
| int | batchSize | 32 | How many rows of data we should train in a single batch. |
| int | epochCount | 10 | How many times we should train over the trajectory. |
| bool | normalizeAdvantage | true | If true, the advantage (actual reward - expected reward) is normalized by subtracting the mean and dividing by the standard deviation across the trajectory. |

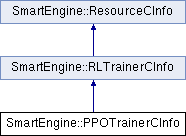

Public Attributes inherited from SmartEngine::RLTrainerCInfo

| Type | Name | Default | Description |
| --- | --- | --- | --- |
| IContext * | context | nullptr | The context to perform graph operations within. |
| IAgentDataStore * | dataStore | nullptr | The data store used to save experience state. |
| const char * | agentName | "" | Should be a unique name across the data store. |
| const char ** | policyNodeNames | nullptr | The names of the output nodes of the actor (the network used to manipulate the environment). Only the output nodes need to be specified. They should match the actions provided by the agent. |
| int | policyNodeNameCount | 0 | The number of elements in the policy node name array. |
| float | gamma | 0.99f | Reward decay over time. |
| GradientDescentTrainingInfo | trainingInfo | | Gradient descent training parameters. |
| int | sequenceLength | 1 | LSTM sequence lengths. Can be ignored if there is no LSTM in the graphs. |

Public Attributes inherited from SmartEngine::ResourceCInfo

| Type | Name | Default | Description |
| --- | --- | --- | --- |
| const char * | resourceName | nullptr | Optional resource name that will be used with Load() and Save() if no other name is provided. |

Detailed Description

Data used to construct an IPPOTrainer instance

Member Data Documentation

◆ batchSize

| int SmartEngine::PPOTrainerCInfo::batchSize = 32 |

How many rows of data we should train in a single batch.

◆ curiosityModule

| ICuriosityModule* SmartEngine::PPOTrainerCInfo::curiosityModule = nullptr |

Optional curiosity module for additional exploration rewards

◆ entropyCoefficient

| float SmartEngine::PPOTrainerCInfo::entropyCoefficient = 0.01f |

How much entropy contributes to the loss. Entropy is a measure of how random our output is. At the beginning of training, we want random output, but towards the end we want less random output so that we choose paths we know work.
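To make "how random our output is" concrete, the sketch below computes the Shannon entropy of a discrete action distribution. It assumes a discrete action space and is not taken from SmartEngine; `ActionEntropy` is a hypothetical helper name.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Shannon entropy (in nats) of a discrete action distribution.
// Illustrative helper only; it is not part of the SmartEngine API.
double ActionEntropy(const std::vector<double>& probabilities)
{
    double entropy = 0.0;
    for (double p : probabilities)
    {
        assert(p >= 0.0 && p <= 1.0);
        if (p > 0.0) // a zero-probability action contributes nothing
        {
            entropy -= p * std::log(p);
        }
    }
    return entropy;
}
```

A uniform distribution gives the highest entropy and a distribution that always picks the same action gives zero, so a larger entropyCoefficient rewards the policy for staying closer to uniform.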

◆ epochCount

| int SmartEngine::PPOTrainerCInfo::epochCount = 10 |

How many times we should train over the trajectory.
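As a rough guide to how epochCount interacts with trajectorySize and batchSize, the sketch below counts batch updates per collected trajectory. It assumes each epoch visits every row once, batchSize rows at a time; the source does not confirm that SmartEngine schedules its batches this way, and `GradientStepsPerTrajectory` is a hypothetical name.

```cpp
#include <cassert>

// Hypothetical helper: number of batch updates performed on one trajectory,
// assuming every epoch visits each row once, batchSize rows at a time.
int GradientStepsPerTrajectory(int trajectorySize, int batchSize, int epochCount)
{
    assert(trajectorySize > 0 && batchSize > 0 && epochCount > 0);
    // Round up so that a final partial batch still counts as one update.
    const int batchesPerEpoch = (trajectorySize + batchSize - 1) / batchSize;
    return batchesPerEpoch * epochCount;
}
```

Under that assumption, the defaults (2048 rows, batches of 32, 10 epochs) work out to 64 batches per epoch and 640 updates per trajectory.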

◆ gaeLambda

| float SmartEngine::PPOTrainerCInfo::gaeLambda = 0.95f |

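The lambda parameter of generalized advantage estimation (GAE). It acts as a multiplier on top of gamma when discounting future temporal-difference errors in the advantage estimate.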
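The following is a minimal sketch of generalized advantage estimation, showing where lambda multiplies gamma. It is the textbook recurrence, not SmartEngine's implementation: `ComputeGae` is a hypothetical name, episode boundaries are ignored, and `values` is assumed to carry one extra trailing entry used as the bootstrap value.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Textbook GAE over a single uninterrupted trajectory (no episode resets).
// values must have one more entry than rewards; values.back() is the
// bootstrap estimate for the state after the last step.
std::vector<double> ComputeGae(const std::vector<double>& rewards,
                               const std::vector<double>& values,
                               double gamma,
                               double lambda)
{
    assert(values.size() == rewards.size() + 1);
    std::vector<double> advantages(rewards.size(), 0.0);
    double running = 0.0;
    // Walk backwards so each step can reuse the advantage of the next step.
    for (std::size_t i = rewards.size(); i-- > 0;)
    {
        // Temporal-difference error for step i.
        const double delta = rewards[i] + gamma * values[i + 1] - values[i];
        // lambda scales gamma here: lambda = 0 keeps only the one-step error.
        running = delta + gamma * lambda * running;
        advantages[i] = running;
    }
    return advantages;
}
```

With lambda set to 0 each advantage is just the one-step error; with lambda set to 1 the errors of all later steps are summed with plain gamma discounting.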

◆ graph

| IGraph* SmartEngine::PPOTrainerCInfo::graph = nullptr |

The graph we are training. This should contain the policy network and value network.

◆ normalizeAdvantage

| bool SmartEngine::PPOTrainerCInfo::normalizeAdvantage = true |

If true, the advantage (actual reward - expected reward) is normalized by subtracting the mean and dividing by the standard deviation across the trajectory.
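The normalization described above can be sketched as follows. This is an illustration rather than the library's code: `NormalizeAdvantages` is a hypothetical name, the population standard deviation is used, and the small `epsilon` that guards against division by zero is an assumption.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Shifts advantages to zero mean and scales them to (roughly) unit variance.
// epsilon is an assumed safeguard for trajectories with identical advantages.
void NormalizeAdvantages(std::vector<double>& advantages, double epsilon = 1e-8)
{
    assert(!advantages.empty());
    const double count = static_cast<double>(advantages.size());

    double mean = 0.0;
    for (double a : advantages) { mean += a; }
    mean /= count;

    double variance = 0.0;
    for (double a : advantages) { variance += (a - mean) * (a - mean); }
    variance /= count; // population variance across the trajectory

    const double stddev = std::sqrt(variance);
    for (double& a : advantages) { a = (a - mean) / (stddev + epsilon); }
}
```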

◆ policyClipEpsilon

| float SmartEngine::PPOTrainerCInfo::policyClipEpsilon = 0.2f |

Range (as a fraction, so 0.2 means 20%) within which the policy is allowed to change in a single step.
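As an illustration of how a clip range limits the policy change, here is the standard PPO clipped surrogate for a single sample. It is a sketch of the published formulation, not SmartEngine's code; `ClippedSurrogate` and its parameters are hypothetical names, and `ratio` stands for the new policy's probability of the action divided by the old policy's.

```cpp
#include <algorithm>
#include <cassert>

// Standard PPO clipped surrogate objective for one sample.
// ratio = pi_new(action | state) / pi_old(action | state).
double ClippedSurrogate(double ratio, double advantage, double clipEpsilon)
{
    assert(ratio >= 0.0 && clipEpsilon >= 0.0);
    // Keep the ratio inside [1 - epsilon, 1 + epsilon].
    const double clippedRatio =
        std::max(1.0 - clipEpsilon, std::min(ratio, 1.0 + clipEpsilon));
    // Taking the minimum removes any gain from moving the ratio outside the
    // clip range, while still penalizing moves that make things worse.
    return std::min(ratio * advantage, clippedRatio * advantage);
}
```

With clipEpsilon at 0.2 and a positive advantage, raising the ratio beyond 1.2 no longer increases the objective, so there is no incentive to push the policy further in one step.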

◆ trajectorySize

| int SmartEngine::PPOTrainerCInfo::trajectorySize = 2048 |

How many rows of data we should wait for before training

◆ valueCoefficient

| float SmartEngine::PPOTrainerCInfo::valueCoefficient = 1.0f |

How much the value contributes to the loss
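For orientation, the sketch below shows how the value and entropy coefficients weight their terms in the usual PPO loss. Whether SmartEngine combines the terms with exactly these signs is an assumption, and `CombinedPpoLoss` with its parameters is a hypothetical name.

```cpp
#include <cassert>

// Usual PPO loss to minimize: maximize the policy objective and the entropy,
// minimize the critic's error. Illustrative only; not the SmartEngine API.
double CombinedPpoLoss(double policyObjective,
                       double valueLoss,
                       double entropy,
                       double valueCoefficient,
                       double entropyCoefficient)
{
    assert(valueCoefficient >= 0.0 && entropyCoefficient >= 0.0);
    return -policyObjective
         + valueCoefficient * valueLoss
         - entropyCoefficient * entropy;
}
```

In this form, raising valueCoefficient makes critic errors weigh more heavily against the policy term, while raising entropyCoefficient lowers the loss for more random policies.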

◆ valueNodeName

| const char* SmartEngine::PPOTrainerCInfo::valueNodeName = "" |

The name of the output of the critic node. This node should be a linear layer with one output neuron and no activation function.